What's New About DeepSeek

Clement Eads

DK

2025-02-28

With its impressive capabilities and efficiency, DeepSeek Coder V2 is poised to become a game-changer for developers, researchers, and AI enthusiasts alike. Artificial intelligence has entered a new era of innovation, with models like DeepSeek-R1 setting benchmarks for performance, accessibility, and cost-effectiveness. Mathematical Reasoning: with a score of 91.6% on the MATH benchmark, DeepSeek-R1 excels at solving advanced mathematical problems. Large-scale RL in post-training: reinforcement learning techniques are applied during the post-training phase to refine the model's ability to reason and solve problems. Logical Problem-Solving: the model can break problems down into smaller steps using chain-of-thought reasoning. Its innovative features, such as chain-of-thought reasoning, long-context support, and caching mechanisms, make it an excellent choice for individual developers and enterprises alike. Together, these factors make DeepSeek-R1 an ideal choice for developers looking for high performance at a lower cost, with complete freedom over how they use and modify the model. I think I'll build a small project and document it in monthly or weekly devlogs until I get a job.
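Because DeepSeek-R1 exposes its chain-of-thought in the output, a common first task is separating the reasoning from the final answer. The sketch below assumes the raw completion of the open-weights model wraps its reasoning in `<think>...</think>` tags; since some serving setups pre-fill the opening tag, it only relies on the closing `</think>`. The function name `split_reasoning` is hypothetical, not part of any DeepSeek library.

```python
def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Split a raw R1-style completion into (reasoning, answer).

    Assumption: reasoning ends at the first "</think>"; the opening
    "<think>" tag may or may not be present in the text.
    """
    head, sep, tail = raw_output.partition("</think>")
    if not sep:
        # No reasoning block found: treat the whole text as the answer.
        return "", raw_output.strip()
    reasoning = head.strip().removeprefix("<think>").strip()
    return reasoning, tail.strip()


if __name__ == "__main__":
    sample = "<think>17 * 3 = 51, then add 4.</think>The result is 55."
    reasoning, answer = split_reasoning(sample)
    print("reasoning:", reasoning)
    print("answer:", answer)
```

Using `str.partition` rather than a regex keeps the sketch tolerant of a missing opening tag, at the cost of ignoring any second `</think>` in the answer text.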

Unlike many proprietary models, DeepSeek-R1 is fully open-source under the MIT license. DeepSeek-R1 is an advanced AI model designed for tasks requiring complex reasoning, mathematical problem-solving, and programming assistance. The disk caching service is now available to all users and requires no code or interface changes. Aider lets you pair program with LLMs to edit code in your local git repository; you can start a new project or work with an existing git repo. The model is optimized for both large-scale inference and small-batch local deployment, which makes it versatile. Model Distillation: create smaller versions tailored to specific use cases. A common use case is completing code for the user after they provide a descriptive comment. The paper presents the CodeUpdateArena benchmark to test how well large language models (LLMs) can update their knowledge about code APIs that are continuously evolving. The model generates more accurate code than Opus. This large token limit allows it to process lengthy inputs and generate more detailed, coherent responses, a vital feature for handling complex queries and tasks.
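Why caching "requires no code changes" is easier to see with a toy model. The sketch below assumes a simplified prefix cache: a request earns cache hits only on the longest token prefix it shares with an earlier request, and everything after that point is a miss. `PrefixCache` is a hypothetical illustration, not DeepSeek's implementation, which may differ (for example in storage granularity or expiry).

```python
from dataclasses import dataclass, field


@dataclass
class PrefixCache:
    """Toy prompt-prefix cache (hypothetical, for illustration only)."""

    seen: list[list[str]] = field(default_factory=list)

    def lookup(self, tokens: list[str]) -> tuple[int, int]:
        """Return (hit_tokens, miss_tokens), then remember this request."""
        best = 0
        for previous in self.seen:
            shared = 0
            # Count matching tokens from the start until the first mismatch.
            for a, b in zip(previous, tokens):
                if a != b:
                    break
                shared += 1
            best = max(best, shared)
        self.seen.append(list(tokens))
        return best, len(tokens) - best


if __name__ == "__main__":
    cache = PrefixCache()
    system = ["You", "are", "a", "helpful", "assistant", "."]
    print(cache.lookup(system + ["Hi"]))     # (0, 7): nothing cached yet
    print(cache.lookup(system + ["Hello"]))  # (6, 1): shared system prompt hits
```

The practical takeaway under this assumption: keeping stable content (system prompt, few-shot examples, long documents) at the start of the prompt maximizes the cached prefix without changing any client code.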

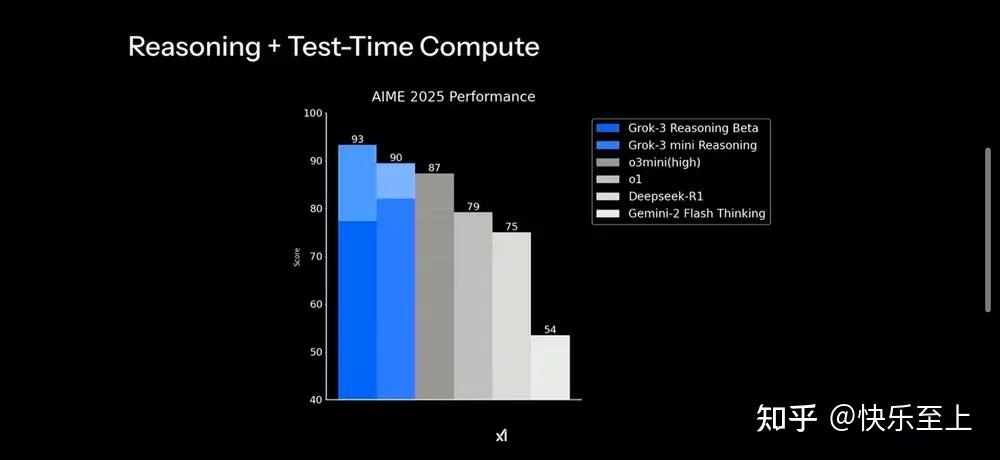

For companies handling large volumes of similar queries, this caching feature can lead to substantial cost reductions, with up to 90% savings on repeated queries. The API offers cost-efficient rates and incorporates a caching mechanism that significantly reduces expenses for repetitive queries. The DeepSeek-R1 API is designed for ease of use while offering robust customization options for developers. First, obtain your API key from the DeepSeek Developer Portal. To address this problem, the researchers behind DeepSeekMath 7B took two key steps. The results show that DeepSeek-R1 is not only competitive with but often superior to OpenAI's o1 model in key areas. A striking example: DeepSeek-R1 thinks for around 75 seconds and successfully solves the ciphertext problem from OpenAI's o1 blog post! DeepSeek-R1 is a state-of-the-art reasoning model that rivals OpenAI's o1 in performance while giving developers the flexibility of open-source licensing. It employs large-scale reinforcement learning during post-training to refine its reasoning capabilities.
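Once you have an API key, a request is a single HTTP POST. The sketch below builds (but does not send) a chat request using only the standard library, and prices the prompt side of a response from its usage block. It assumes, and you should verify against DeepSeek's official API docs, that the API is OpenAI-compatible at `https://api.deepseek.com`, that R1 is exposed as `deepseek-reasoner`, and that usage reports `prompt_cache_hit_tokens` and `prompt_cache_miss_tokens`. The prices are made up; a hit priced at 10% of a miss models the "up to 90%" savings.

```python
import json
import os
import urllib.request

# Assumptions: verify base URL and model name against the official docs.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-reasoner"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def input_cost(usage: dict, miss_price: float, hit_price: float) -> float:
    """Cost of the prompt tokens; prices are per million tokens (illustrative)."""
    hits = usage.get("prompt_cache_hit_tokens", 0)    # assumed field name
    misses = usage.get("prompt_cache_miss_tokens", 0)  # assumed field name
    return (hits * hit_price + misses * miss_price) / 1_000_000


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY", "sk-placeholder")
    req = build_chat_request("Explain RL post-training briefly.", key)
    print(req.get_method(), req.full_url)
    # Hypothetical: 90% of prompt tokens hit the cache, hits cost 10% of misses.
    usage = {"prompt_cache_hit_tokens": 900_000, "prompt_cache_miss_tokens": 100_000}
    print(input_cost(usage, miss_price=1.0, hit_price=0.1))
```

To actually send the request you would pass it to `urllib.request.urlopen` and parse the JSON body; in this example, a 90% hit rate cuts the prompt cost from 1.0 to 0.19 units, so the full 90% saving only applies when every prompt token is a cache hit.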

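For context on the cipher example, here is a sketch of the decoding rule as we understand the puzzle in OpenAI's o1 post (stated there via the example "oyfjdnisdr rtqwainr acxz mynzbhhx" meaning "Think step by step"): each pair of ciphertext letters encodes one plaintext letter whose alphabet position is the average of the pair's positions, with a=1 through z=26. The function name is hypothetical, and this is the rule a solver has to infer, not DeepSeek-R1's reasoning trace.

```python
import string

ALPHABET = string.ascii_lowercase


def decode_pair_average(ciphertext: str) -> str:
    """Decode by averaging the alphabet positions of each letter pair.

    Assumes lowercase-able letters separated by spaces; odd pair sums are
    floored, although the published examples only produce even sums.
    """
    words = []
    for word in ciphertext.lower().split():
        letters = []
        for i in range(0, len(word) - 1, 2):
            a = ALPHABET.index(word[i]) + 1
            b = ALPHABET.index(word[i + 1]) + 1
            letters.append(ALPHABET[(a + b) // 2 - 1])
        words.append("".join(letters))
    return " ".join(words)


if __name__ == "__main__":
    print(decode_pair_average("oyfjdnisdr rtqwainr acxz mynzbhhx"))
```

For example, "oy" decodes to "t" because o=15 and y=25 average to 20.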